AI-Written Research Articles: Who Checks the Truth?


29/2026

Imagine a world where a cutting-edge scientific paper can be written in less than a minute, without a single human ever running an experiment.

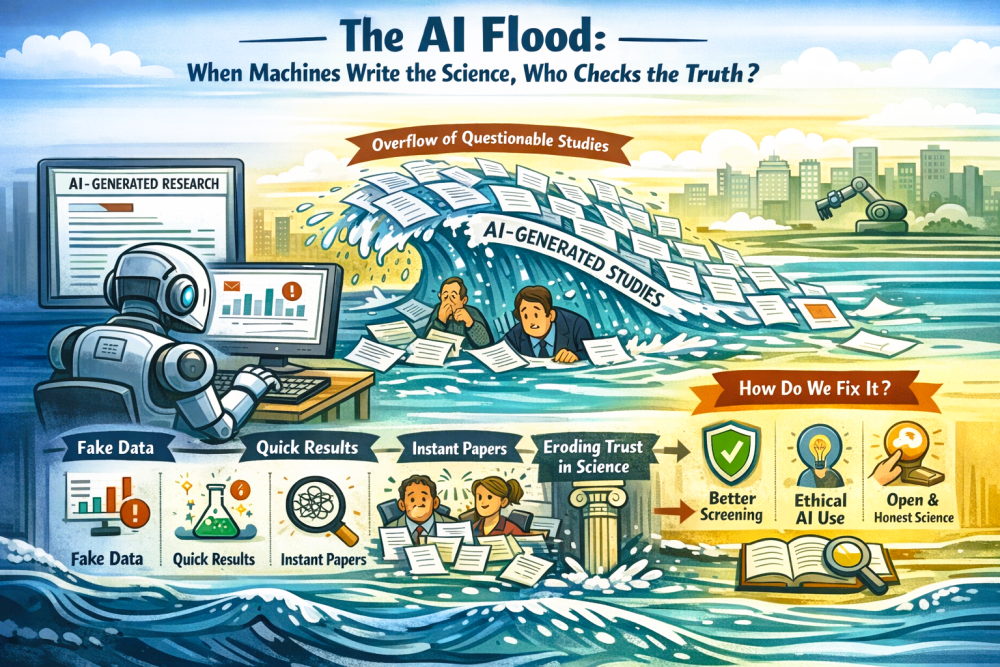

That reality, once dismissed as science fiction, is now unfolding in real time. A recent article in Nature by science journalist Elizabeth Gibney warns that a wave of what she calls “AI slop” (low-quality, AI-generated research submissions) is flooding the computer science field and threatening the foundations of scientific progress. This raises a pressing question: how can the scientific community verify research effectively and protect its integrity amid this surge?

The Problem: A Rising Tide of Junk Science

Researchers worldwide are facing an unexpected side effect of the AI revolution: a sharp increase in research papers written, in whole or in part, by artificial intelligence tools. One young scientist, Raphael Wimmer, reportedly used a new AI tool to write up, in less than a minute, an entire experiment he never actually ran.

This flood of AI-generated material, often unverified and poorly grounded in real data, is overwhelming preprint servers and academic conferences. Organizers now face the difficult task of filtering out submissions that appear to be genuine research but lack scientific rigor.

Why This Matters to the Everyday Reader

Most people think of science as a slow, careful process: observe, hypothesize, test, verify. AI, especially generative language models, has disrupted that rhythm. These tools can produce convincing text, graphs, and code that look like legitimate research. The risk isn’t always intentional fraud; sometimes AI simply makes it too easy to publish without putting in the hard work.

That matters because scientific knowledge doesn’t exist in a vacuum. Breakthroughs in computing algorithms drive everything from social media to self-driving cars. If that foundation becomes unstable, with poor science mixed in among the good, the ripple effects could reach far beyond academia.

An Unintended Side-Effect of Innovation

Artificial intelligence offers great potential: it can speed up data analysis, inspire new solutions, and make knowledge more accessible. Used responsibly, it can empower scientists and foster trust in research, provided the community continues to uphold high standards.

Computer science is one of the fastest-moving fields and is now at a crossroads. Without clear guardrails, the very technologies meant to expand human knowledge could inadvertently undermine it.

The Broader Context: A Science Ecosystem Under Stress

This isn’t an isolated issue. Across various fields, scientists are dealing with AI’s disruptive influence. From problems in peer review and the identification of AI-generated plagiarism to ethical discussions about AI’s role in decision-making and grant applications, the research landscape is changing quickly.

And while the Nature report focuses on computer science, the implications echo widely. Whether in climate science, health research, or economics, any field that relies on rigorous evidence could be affected if AI slop becomes normalized.

How We Can Move Forward: Recommendations for the Contemporary World

To navigate this remarkable moment, individuals, institutions, and societies must act with thoughtfulness and courage.

1. Strengthen Quality Control in Research

Academic journals and conference organizers should adopt stronger screening tools, including AI-detection software, to distinguish genuine work from low-quality or fake submissions. Such oversight can reassure readers that quality and integrity are being actively protected.

2. Educate Scientists and Students on Responsible AI Use

Institutions should train researchers and students on how to use AI responsibly, not as a shortcut, but as a tool to enhance human insight. Clear guidelines on disclosing AI assistance in manuscripts are essential.

3. Support Transparent and Open Science

Open data, transparent methods, and reproducibility should be fundamental to modern science. Sharing code and raw data openly lets others check and reproduce results, and it fosters a sense of shared responsibility that helps readers trust the scientific community and feel part of it.

4. Build Cross-Disciplinary AI Ethics Boards

Universities and research organizations should create ethics committees that include computer scientists, ethicists, policymakers, and the public. These groups can help establish standards for AI use in science and beyond.

5. Encourage Public Literacy on Science and AI

People are interacting with AI-generated content more each day. Improving science and digital literacy in schools and media helps citizens critically assess claims, whether in news articles, scientific studies, or social media posts.

According to Prof. Dr. Muhammad Mukhtar, whose remarks were highlighted in Nature a few years ago and drew significant international attention, the world is at a critical turning point. He has long stressed that “Pakistan needs a powerful ethics and integrity body,” and he now extends that call beyond the country's borders. In the age of artificial intelligence, he believes, the global research community urgently needs a strong, transparent, and independent framework to protect ethics and integrity in scientific publications.

Professor Mukhtar does not view artificial intelligence as an enemy of science. Instead, he acknowledges its tremendous potential. AI can analyze large volumes of data in seconds, assist researchers in identifying patterns, and accelerate innovation like never before. When used responsibly, AI could usher in a new era of scientific discovery, marked by speed, creativity, and worldwide collaboration.

But progress without ethical principles, especially in AI-mediated research involving human subjects, is dangerous, he warns.

The real issue isn't the technology itself; it's how we use it. When speed outweighs accuracy, when publishing is easier than experimenting, and when machine-generated papers start replacing carefully conducted research, science risks losing its foundation of trust. Professor Mukhtar expresses particular concern about the ripple effects of fake or poorly validated research. In fields like medicine, biotechnology, pharmaceuticals, and related sciences, weak or fabricated findings could influence the development of future products that directly affect human lives. A flawed algorithm or unreliable study today could lead to unsafe medical devices, ineffective treatments, or misguided policies tomorrow.

For him, this is more than an academic debate. It is a matter of public safety and human welfare.

He agrees with the broader warning echoed in Nature: the danger does not lie in artificial intelligence itself. The danger lies in careless adoption, weak oversight, and the absence of strong ethical guardrails. AI is a powerful tool, but without integrity, even powerful tools can cause harm.

Professor Mukhtar, therefore, calls for a global movement toward responsible AI use in research, grounded in transparency, accountability, and a renewed commitment to truth over speed. Ethical review systems must be strengthened. Disclosure of AI assistance should become standard practice. Reproducibility and open data must be prioritized. Most importantly, human judgment must remain at the center of scientific progress.

Artificial intelligence has the power to transform discovery. Whether it becomes a force for advancement or a source of confusion depends entirely on the choices we make today. And in those choices lies the future of science and the well-being of generations to come.